We are long into the marketing hype cycle on cloud, which makes clear criteria for assessing and evaluating the different cloud options critical. Given the complexity of the options, what approach should a medium-to-large enterprise take to best leverage cloud and optimize its data center? And what are the pitfalls?

While cloud computing is often presented as homogeneous, there are many different types, from infrastructure as a service (IaaS) to software as a service (SaaS), with many flavors in between. Some of the best-known examples are Amazon’s infrastructure services (IaaS), Google’s email and office productivity services (SaaS), and Salesforce.com’s customer relationship management (CRM) services (SaaS). Typically, the cloud is envisioned as an accessible, low-cost compute utility in the sky that is always available. Despite this lofty promise, companies will need to select and build their cloud environments carefully to avoid fracturing their computing capabilities, locking themselves into a single higher-cost environment, or impairing their ability to differentiate and gain competitive advantage (or all three).

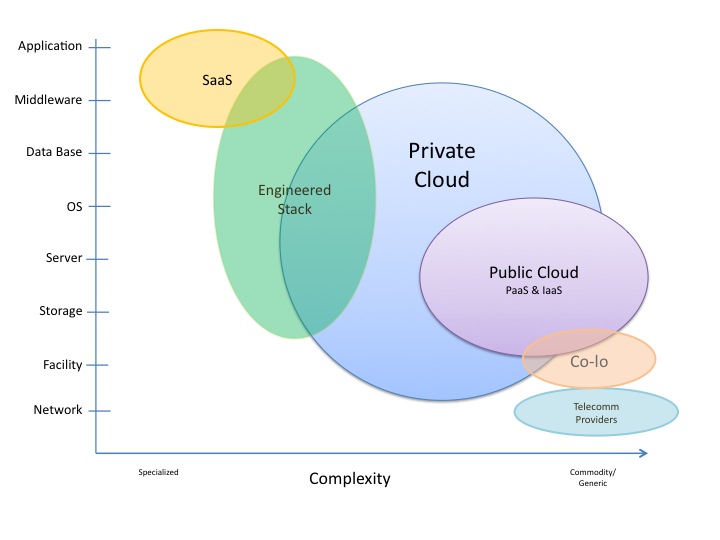

The chart below provides an overview of the different types of cloud computing:

Note the positioning of the two dominant types of cloud computing:

- there is the specialized Software-as-a-Service (SaaS), where the entire stack from server to application (even the application version) is provided, with minimal variation

- there is the very generic IaaS or PaaS, where a set of server and OS versions is available along with several types of storage, and any compatible database, middleware, or application can be installed and run on it.

Other types of cloud computing include private cloud, which is essentially IaaS that an enterprise builds for itself. The private cloud is the evolution of the current corporate virtualized server and storage farm into a more mature instance with clearly defined service configurations, offerings, and billing, as well as highly automated provisioning and management.

Another technology reshaping the data center is the engineered stack. Engineered stacks are a further evolution of the computer appliances that have been available for decades: tightly specified components, designed and engineered to be integrated for superior performance at lower cost. These devices have typically been found in the network, security, database, and specialized compute spaces. Firewalls and other security devices have long used this approach, where generic technology (CPU, storage, OS) is closely integrated with additional special-purpose software and sold and serviced as a packaged solution. There has been a steady increase in the number of appliance or engineered stack offerings, moving further into data analytics, application servers, and middleware.

With the landscape set, it is important to understand the technology market forces and the customer economics that will shape the data center over the next five years. First, technology vendors will continue to invest in and expand their SaaS and engineered stack offerings because these offer significantly better margins and more certain long-term revenue. A SaaS offering earns a far higher Wall Street multiple than traditional software licenses, and for good reason: it can be viewed as a consistent, ongoing revenue stream from a customer who is heavily locked in. Similarly, with engineered stacks, traditional hardware vendors are racing to integrate as far up the stack as possible, both to create additional value and, more importantly, to lock in customers so that upgrades, support, and maintenance revenue is more assured and earns higher margins than traditional commodity servers or storage. An engineered stack is much harder to replace than commodity equipment.

Industry investment will be accelerated by customer spending. Both SaaS and engineered stacks provide appealing business value that will justify their selection. For SaaS, it is speed and ease of implementation as well as potentially variable cost. For engineered stacks, it is often a performance uplift at potentially lower cost that makes the sale. Both should be selected where the business case makes sense, but with the following cautions:

- for SaaS:

  - be very careful if it covers core business functionality or processes; you could be locking away your differentiation and, ultimately, your competitiveness.

  - before you sign, know how you will get your data back should you stop using the SaaS.

  - make sure the integrity and security of your data are ensured while it is in your vendor’s hands.

- for engineered stacks:

  - understand where the product is in its lifecycle before selecting it.

  - anticipate the eventual migration path as the product fades at the end of its cycle.

For both, avoid integrating key business logic into the vendor’s product. Otherwise you will face high migration costs at the end of the product’s life or when a more compelling product appears. There are multiple ways to ensure that your key functionality and business rules remain independent and modular, outside the vendor’s service package.
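One common way to do this is a "ports and adapters" style: your business rule depends only on an interface you own, and each vendor is reached through a thin adapter behind that interface. The sketch below is illustrative only and assumes a hypothetical CRM-style SaaS; `CustomerStore`, `InMemoryStore`, `discount_rate`, and the spend thresholds are made-up names and values, not any vendor's API.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class Customer:
    customer_id: str
    annual_spend: float


class CustomerStore(ABC):
    """Interface owned by the enterprise: the only view of customer data the rules see."""

    @abstractmethod
    def get_customer(self, customer_id: str) -> Customer: ...


class InMemoryStore(CustomerStore):
    """Stand-in adapter. A real adapter would wrap a (hypothetical) SaaS client here."""

    def __init__(self, customers: dict[str, Customer]) -> None:
        self._customers = customers

    def get_customer(self, customer_id: str) -> Customer:
        return self._customers[customer_id]


def discount_rate(store: CustomerStore, customer_id: str) -> float:
    """Key business rule kept in your own code, not configured inside the vendor product.

    The thresholds are hypothetical, for illustration only.
    """
    spend = store.get_customer(customer_id).annual_spend
    if spend >= 100_000:
        return 0.10
    if spend >= 10_000:
        return 0.05
    return 0.0


store = InMemoryStore({"c1": Customer("c1", 25_000.0)})
rate = discount_rate(store, "c1")  # 0.05
```

Switching SaaS vendors then means writing a new `CustomerStore` adapter; `discount_rate` itself does not change.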

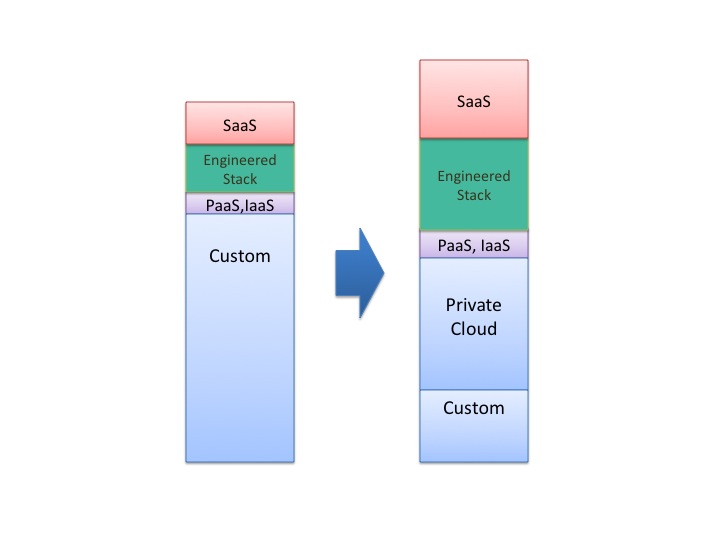

With these caveats in mind, and a critical eye on your contract to avoid onerous terms and lock-ins, you will be successful with project-level decisions. But you should drive optimization at the portfolio level as well. If you are a medium-to-large enterprise, you should be driving your internal infrastructure team to mature its offering into an internal private cloud. Virtualization, already widespread in the industry, is just the first step. You should then move to eliminate or minimize your custom configurations (preferably to less than 20% of your server population). Next, invest in the tools, processes, and engineering needed to heavily automate the provisioning and management of the data center. Doing this will also improve the quality of service.
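Tracking the "less than 20% custom" target is simple arithmetic once you have an inventory. The sketch below assumes each server record carries a configuration name and that the private cloud publishes a catalog of standard configurations; the catalog entries and inventory are hypothetical.

```python
# Hypothetical catalog of standard private-cloud configurations.
STANDARD_CATALOG = {"web-small", "app-medium", "db-large"}


def custom_share(inventory: list[str], catalog: set[str]) -> float:
    """Fraction of servers whose configuration is not in the standard catalog."""
    if not inventory:
        return 0.0
    custom = sum(1 for cfg in inventory if cfg not in catalog)
    return custom / len(inventory)


def meets_target(inventory: list[str], catalog: set[str], target: float = 0.20) -> bool:
    """True when custom configurations are strictly below the target share."""
    return custom_share(inventory, catalog) < target


# Hypothetical inventory: 8 standard servers and 2 custom ones.
inventory = ["web-small"] * 6 + ["app-medium"] * 2 + ["legacy-aix", "custom-gpu"]
share = custom_share(inventory, STANDARD_CATALOG)  # 0.2
ok = meets_target(inventory, STANDARD_CATALOG)     # False: 20% is not below 20%
```

Here two custom servers out of ten land exactly on the line, so converting one more legacy system would bring the shop under the target.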

Make sure that you do not shift so much of your processing to SaaS that you ‘balkanize’ your own utility; your data center utility would then operate subscale and inefficiently. Should you overreach, expect to incur heavy integration costs on subsequent initiatives, because your functionality will be spread across multiple SaaS vendors in many data centers. Expect performance issues as well, since your systems will communicate at WAN speeds rather than LAN speeds across these centers. And expect to lose negotiating position with SaaS providers because you have lost your ‘in-source’ strength.
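A back-of-the-envelope calculation shows why the WAN penalty bites for chatty integrations. The round-trip times below are assumptions chosen for illustration (roughly sub-millisecond inside one data center, tens of milliseconds between providers), and the model ignores processing time and parallelism.

```python
def sequential_call_time_ms(calls: int, round_trip_ms: float) -> float:
    """Network time for a chain of sequential, synchronous calls (ignores processing time)."""
    return calls * round_trip_ms


LAN_RTT_MS = 0.5   # assumed round trip within one data center
WAN_RTT_MS = 40.0  # assumed round trip between two providers' data centers

CALLS = 200  # hypothetical number of sequential calls in one business transaction
lan = sequential_call_time_ms(CALLS, LAN_RTT_MS)  # 100.0 ms
wan = sequential_call_time_ms(CALLS, WAN_RTT_MS)  # 8000.0 ms
```

The same transaction goes from a tenth of a second to eight seconds once its calls cross data centers, which is why functionality split across many SaaS providers often needs redesign rather than simple reconnection.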

I would venture that over the next five years a well-managed IT shop will see:

- the most growth in its SaaS and engineered stack portfolio;

- a conversion from custom infrastructure to a robust private cloud, with a small sliver of custom configurations remaining for unconverted legacy systems; and

- minimal growth in PaaS and IaaS (growth here is actually driven by small to medium firms).

So, on the road to a cloud future, SaaS and engineered stacks will be part of nearly every company’s portfolio. Vendor lock-in could be around every corner, but good IT shops will leverage these capabilities judiciously, develop their own private cloud capabilities, and retain critical IP while avoiding lock-in. We will see far greater efficiency in the data center as custom configurations are heavily reduced. So while the prospects are indeed ‘cloudy’, the future is potentially bright for the thoughtful IT shop.

What changes or guidelines would you apply when considering cloud computing and the many offerings? I look forward to your perspective.

This post originally appeared in Information Week on January 4. I have since extended and revised it.

Best, Jim Ditmore

As always, great post, Jim. There are two points I would strongly emphasize, as they are often forgotten:

1- The point that companies should be cautious about their core competitive strengths, and about how external clouds and SaaS will influence them. Many groups get this wrong by keeping what they shouldn’t and outsourcing what they should have kept. Often they try to hit a home run with their first swing, when they should have just taken the base hit to set themselves up for the long run.

2- The movement toward private clouds for medium to large enterprises. On this point specifically, many companies try out expensive, still-in-the-package services and products advertising “click of a button” automated provisioning of infrastructure. Only after sinking significant licensing costs and project hours do they realize that, just as in point 1 above, they should have bunted the runner over to third. You don’t have to completely eliminate the SDLC. The SDLC does a lot of right-sizing, requirements gathering, and capacity management that is essential for enterprise efficiency. For the majority of companies, bringing system builds and infrastructure deployments down to under 15 days from their current 45+ day schedule would be a godsend. Automation is key.

Again, great article, Jim. Good stuff for '13.

James

James,

Excellent points and well said. Better planning and thoughtful analysis can avoid so many major missteps – in this case in the infrastructure space. Thanks for the comment! Best Jim

Hi Jim,

Excellent article. I would like to know more about how one should avoid building key business logic into vended products. Perhaps this is out of the scope of this discussion on cloud computing, but from a more general point of view, how would you approach this?

As a product architect, I am advising clients on software life cycle management applications, with the goal of reflecting their SDLC processes in those tools. One can narrow the “choice” of process to, say, waterfall or agile, but I am sure there will be customisations. Would it be better to restrict them to those two processes in this case?

I was also thinking of workflow tools used to automate business processes. How would one avoid locking oneself into those tools?

Hope I’ve made sense here :-).

Allen