A recent study by Intel shows that a compute load that required 184 single-core processors in 2005 can now be handled with just 21 processors, a consolidation of roughly nine servers into one.
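The "nine to one" figure follows directly from the two numbers in the study. The short Python check below uses only those two figures and treats each processor as one server, as the sentence above does.

```python
# Figures quoted from the Intel study cited above.
servers_2005 = 184  # single-core processors needed in 2005
servers_now = 21    # processors needed for the same load today

# Consolidation ratio: how many 2005-era servers each new one replaces.
ratio = servers_2005 / servers_now
print(f"{ratio:.2f} to 1")  # prints "8.76 to 1", i.e. roughly nine to one
```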

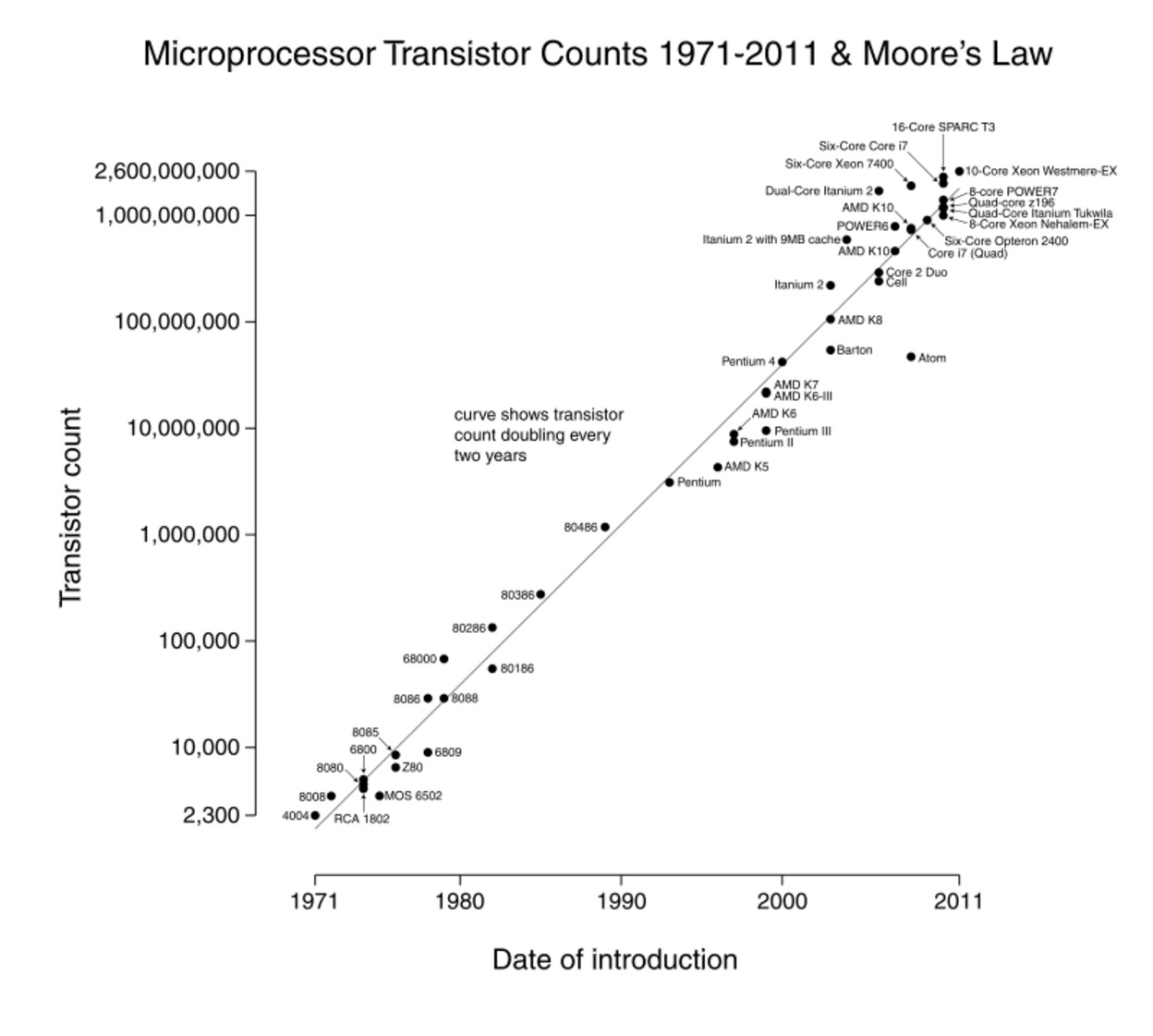

For 40 years, technology rode Moore’s Law to yield ever-more-powerful processors at lower cost. Its compounding effect was astounding: by one of the best analogies, a smartphone now has more processing power than the Apollo astronauts had when they landed on the moon. At the same time, though, the electrical power those processors required rose roughly in step with transistor count. While new technologies (CMOS, for example) provided a one-time step down in power requirements, each subsequent increase in processor frequency and density brought a similar increase in power.

As a result, by 2000 to 2005 the industry was concerned about the amount of power and cooling required for each rack in the data center. And with the enormous increase in servers spurred by Internet commerce, most IT shops have labored for the past decade to supply adequate data center power and cooling.

Meanwhile, most IT shops have seen compute and storage grow 20% to 50% a year, requiring either additional data centers or major increases in power and cooling capacity at existing ones. Since 2008, the pressure has eased somewhat, thanks to both slower business growth and virtualization, which has let companies consolidate servers by as much as 10 to 1 across 30% to 70% of their footprint. But IT shops can deploy virtualization only once, which suggests they’ll be staring at a data center build or major upgrade in the next few years.
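To see why a one-time consolidation only delays the problem, here is a rough Python sketch. It assumes a 10-to-1 consolidation applied to 30% or 70% of the estate (one reading of the figures above), after which demand compounds at 20% or 50% a year and is met by adding like-for-like servers. The function names are illustrative, and the model deliberately ignores hardware refreshes.

```python
import math


def remaining_footprint(fraction_virtualized: float, consolidation: float = 10.0) -> float:
    """Share of the original server count left after consolidating
    `fraction_virtualized` of the estate at `consolidation`:1."""
    untouched = 1.0 - fraction_virtualized
    consolidated = fraction_virtualized / consolidation
    return untouched + consolidated


def years_of_headroom(footprint: float, annual_growth: float) -> float:
    """Years until compound demand growth returns the server count to its
    pre-virtualization level (assumes growth is met with identical servers)."""
    return math.log(1.0 / footprint) / math.log(1.0 + annual_growth)


# Fractions and growth rates are the ranges quoted in the paragraph above.
for f in (0.3, 0.7):
    left = remaining_footprint(f)
    for g in (0.2, 0.5):
        print(f"virtualize {f:.0%}: {left:.0%} of servers remain; "
              f"at {g:.0%} growth that lasts {years_of_headroom(left, g):.1f} years")
```

With these inputs the headroom ranges from under a year (30% virtualized, 50% growth) to about five and a half years (70% virtualized, 20% growth). That is consistent with the point that virtualization postpones a data center build rather than eliminating the need for one.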

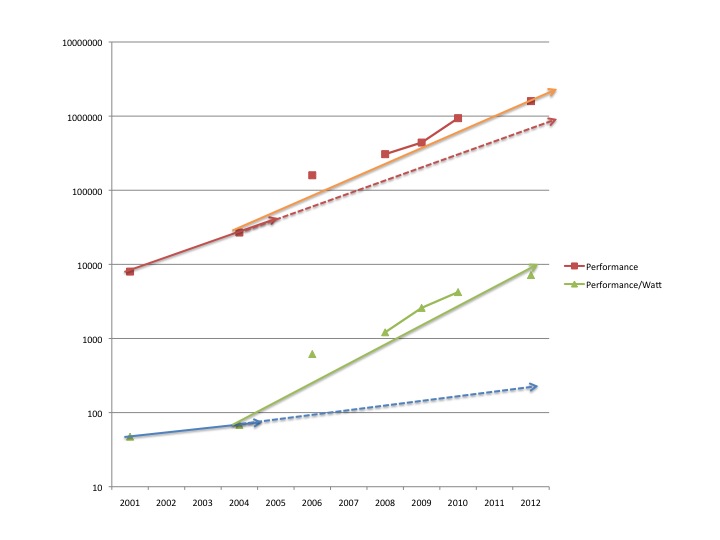

But an interesting thing has happened to server power efficiency. Before 2006, efficiency improvements were nominal, as the solid blue line in the chart above shows. Even if your data center kept the number of servers steady and simply migrated to the latest model, it would need significant increases in power and cooling: you’d get greater compute performance, of course, but your power and cooling needs would rise correspondingly. Since 2006, however, compute efficiency (green line) has improved dramatically, keeping pace with, and perhaps outpacing, the improvement in processor performance (red lines).

The chart above shows how compute efficiency (performance per watt, green line) has broken sharply from its historical trend (blue lines) and is now improving about as fast as compute performance (red lines), perhaps even faster. It covers the HP DL 380 server line over the past decade, but most servers show a similar shift.
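The relationship behind the chart is simple: power drawn equals performance delivered divided by performance per watt. The Python sketch below illustrates it with hypothetical growth rates that are not read from the HP DL 380 chart. It contrasts the pre-2006 pattern, where efficiency lagged performance and each refresh raised power per server, with the post-2006 pattern, where efficiency keeps pace and power per server stays flat.

```python
def power_draw(performance: float, perf_per_watt: float) -> float:
    """Watts needed to deliver `performance` at a given efficiency."""
    return performance / perf_per_watt


def project(years: int, perf_growth: float, efficiency_growth: float) -> list[float]:
    """Relative power draw per server for each year, starting at 1.0, when
    per-server performance and performance per watt each compound annually."""
    draws = []
    for year in range(years + 1):
        perf = (1 + perf_growth) ** year
        eff = (1 + efficiency_growth) ** year
        draws.append(power_draw(perf, eff))
    return draws


# Hypothetical rates chosen only to show the two regimes.
pre_2006 = project(5, perf_growth=0.40, efficiency_growth=0.05)   # power climbs
post_2006 = project(5, perf_growth=0.40, efficiency_growth=0.40)  # power stays flat

for label, draws in (("pre-2006", pre_2006), ("post-2006", post_2006)):
    print(label, [round(d, 2) for d in draws])
```

If efficiency grows faster than performance, the same function shows power per server actually falling, which is the "perhaps even faster" case.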

This stunning shift is likely to continue for several reasons. Power and cooling costs remain a significant share of overall server operating costs. Most companies now assess power efficiency when choosing which servers to buy, so manufacturers can differentiate themselves by improving it. Furthermore, there’s a proliferation of appliances, or “engineered stacks,” that eke out significantly better performance from conventional technology within a given power footprint.

A key reason to expect further gains in compute efficiency is that chipset technologies are increasingly driven by the requirements of consumer mobile devices. One of the most important of those requirements is longer battery life, which places a premium on energy-efficient processors. Chip designs and power-efficiency advances made for the consumer market will flow back into the corporate server market. An excellent example is HP’s Moonshot program, which uses ARM chips (previously used mainly in consumer devices) for a purported reduction in power consumption of 80% or more. Expect this power efficiency trend to continue for the next five and possibly 10 years.

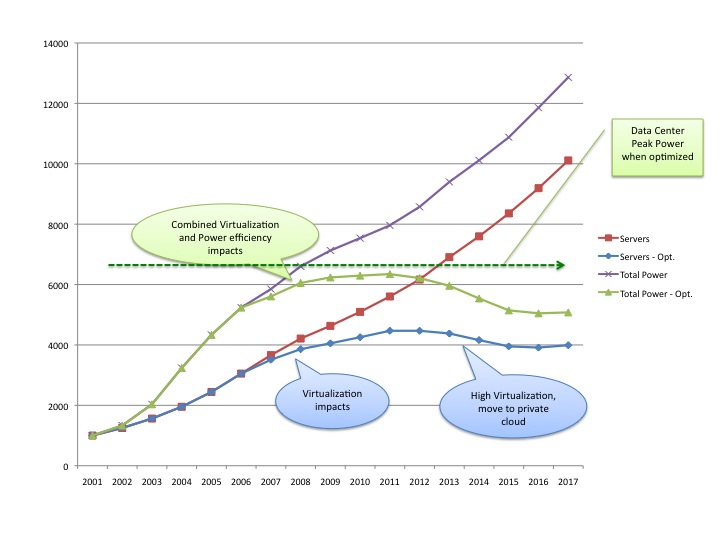

So how does this propitious trend affect the typical IT shop? For one thing, it reduces the need to build another data center. If you have some buffer room in your data center now and can move most of your server estate to a private cloud (virtualized, heavily standardized, automated), you will deliver more compute power yet see the number of servers level off and then decline (blue line), with a similar trend in the power consumed (green line).

This analysis assumes 5% to 10% business growth (translating to a need for a 15% to 20% increase in server performance and capacity). You’ll have to apply best practices in capacity and performance management to get the most from your server and storage pools, but the long-term payoff is big. If you don’t adopt these technologies and approaches, your future is the red and purple lines on the chart: ever-rising compute and data center costs over the coming years.
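A rough Python model shows why the server count can fall even while capacity grows. Servers needed equal demand divided by per-server performance, and power equals demand divided by performance per watt. The 20% demand growth comes from the range above. The 1,000-server starting estate and the 30% hardware improvement rates are hypothetical placeholders, not figures from the chart, and the model leaves out the extra utilization gains a private cloud would add.

```python
from dataclasses import dataclass


@dataclass
class Year:
    year: int
    capacity: float  # compute demand, relative to year 0
    servers: float   # servers needed to meet that demand
    power: float     # total power drawn, relative to year 0


def project_estate(start_servers: float, demand_growth: float,
                   server_perf_growth: float, efficiency_growth: float,
                   years: int) -> list[Year]:
    """Servers needed = demand / per-server performance;
    power = demand / performance per watt. All rates compound annually."""
    rows = []
    for t in range(years + 1):
        capacity = (1 + demand_growth) ** t
        per_server = (1 + server_perf_growth) ** t
        efficiency = (1 + efficiency_growth) ** t
        rows.append(Year(t, capacity,
                         start_servers * capacity / per_server,
                         capacity / efficiency))
    return rows


# Hypothetical estate that refreshes onto newer, more efficient servers.
refreshed = project_estate(start_servers=1000, demand_growth=0.20,
                           server_perf_growth=0.30, efficiency_growth=0.30,
                           years=5)
# Same demand with no hardware improvement, for contrast.
static = project_estate(start_servers=1000, demand_growth=0.20,
                        server_perf_growth=0.0, efficiency_growth=0.0,
                        years=5)

for r, s in zip(refreshed, static):
    print(f"year {r.year}: refreshed {r.servers:6.0f} servers (power x{r.power:.2f}), "
          f"static {s.servers:6.0f} servers (power x{s.power:.2f})")
```

Whenever the per-server performance and efficiency rates exceed demand growth, both the server count and the power draw decline. When hardware stands still, both compound upward, which is roughly the shape of the rising curves on the chart.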

By applying these approaches, you can do more than stem the compute cost tide; you can turn it. Have you started this journey? Have you been able to reduce the total number of servers in your environment? Are you able to meet your current and future business needs and growth within your current data center footprint?

What changes or additions to this approach would you make? I look forward to your thoughts and perspective.

Best, Jim Ditmore

Note this post was first published on January 23rd in InformationWeek. It has been updated since then.
