We have covered quite a bit of ground in previous posts on IT metrics, but we have some important additions to the topic. The first, which we will cover today, is how to evolve your metrics to match your team’s maturity. (Next week, we will cover unit costs and allocations.)

To ground our discussion, let’s first quickly cover the maturity levels of a team. Drawing heavily on the Capability Maturity Model (CMM), there are five levels:

- Ad hoc: A chaotic state with no established processes. Few measures are in place or accurate.

- Repeatable: The process is documented sufficiently and frequently used. Some measures are in place.

- Defined: Processes are defined, standardized, and closely adhered to. Measures are routinely collected and analyzed.

- Managed: Processes are effectively controlled through the use of process metrics with some continuous process improvement (CPI).

- Optimized: Processes are optimized, with statistical analysis and CPI prevalent across all work.

It is important to match your IT metrics to the maturity of your team for several reasons:

- metrics that are beyond the team’s maturity level will be difficult to gather and will likely lack accuracy

- once gathered, such metrics are likely to produce unreliable analysis and conclusions

- actions taken on that basis are unlikely to result in sustained changes by the team

- the difficulty and the likely lack of progress and results can cause the team to view any metrics or process improvement approach negatively

Thus, before you start your team or organization on a metrics journey, ensure you understand their maturity so you can start the journey at the right place. If we take the primary activities of IT (production, projects, and routine services), you can map out the evolution of metrics by maturity as follows:

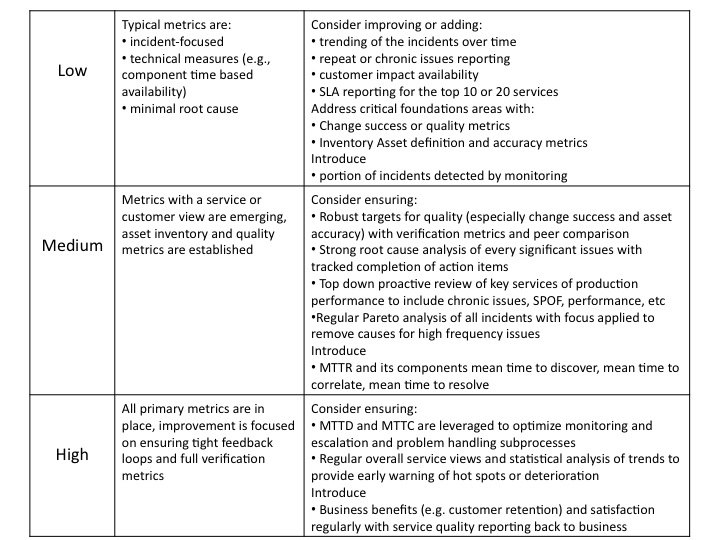

Production metrics – In moving from a low maturity environment to a high maturity one, production metrics should evolve from typical inward-facing, component-view measures of individual instances to customer-view, service-oriented measures with both trend and pattern views as well as incident detail. Here is a detailed view:

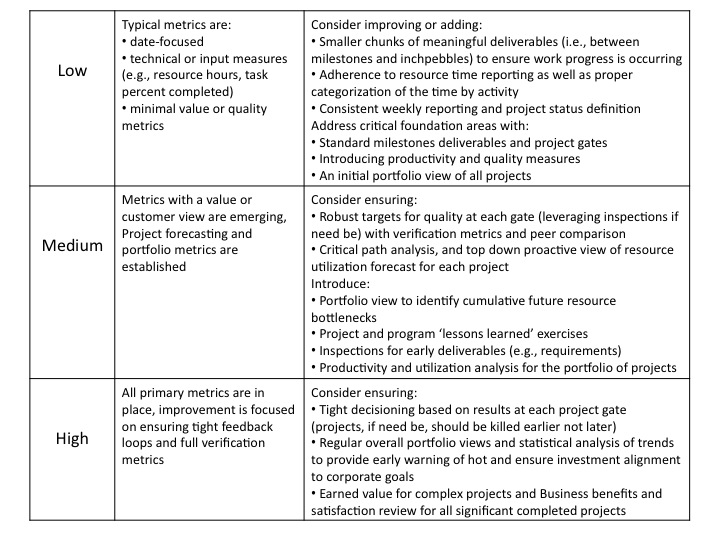

Project metrics – Measures in low maturity environments are project-centric and usually focus on dates and milestones, with poor linkage to real work or quality. As the environment matures, more effective measures can be implemented that map actual work and quality as the work is being completed and provide accurate forecasts of project results. Further, portfolio and program views and trends are available and leveraged.

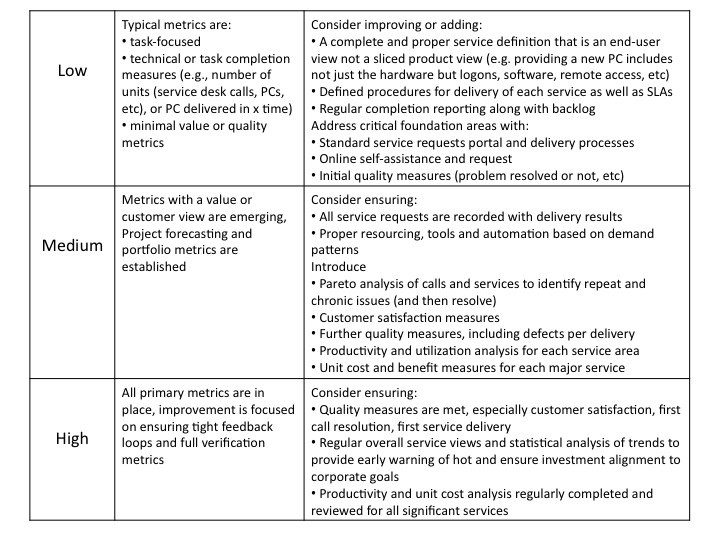

Routine Services – Low maturity measures are typically component or product-oriented at best within a strict SLA definition and lack a service view and customer satisfaction perspective. Higher maturity environments address these gaps and leverage unit costs, productivity, and unit quality within a context of business impact.

The general pattern is that as you move from low to medium and then to high maturity: you introduce process and service definition and accompanying metrics; you move from task or single project views to portfolio views; quality and value metrics are introduced and then exploited; and a customer or business value perspective becomes the prominent measure of delivery success. Note that you cannot just jump to a high maturity approach, as the level of discipline and understanding must be built over time as the organization accumulates experience. To a degree, it is just like getting fit: you must go to the gym regularly and work hard; there is nothing in a bottle that will do it for you instead.

By matching the right level of metrics and proper next steps to your organization’s maturity, you will be rewarded with better delivery and higher quality, and your team will be able to progress, learn, and leverage the next set of metrics. You will avoid the ‘bridge too far’ issue that often occurs when new leaders come into an organization that is less mature than their previous one, yet impose the exact same regimen they were familiar with. They then fail to see why problems result, and the blame falls either on the metrics framework imposed or on the organization, when it is neither… it is the mismatch between the two.

And you will know your team has successfully completed their journey when they go from:

- Production incidents to customer impact to ability to accurately forecast service quality

- Production incidents to test defects to forecasted test defects to forecasted defects introduced to production

- Unit counts of devices to package offerings to customer satisfaction

- Unit counts or tasks to unit cost and performance measures to unit cost trajectories and performance trajectories
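For teams that like to track this explicitly, the progressions above can be written down as ordered stages, with a small helper that names the next step from wherever the team currently sits. This is only a rough sketch: the stage wording is taken from the list above, while the track labels (`service_quality`, `defects`, `offerings`, `unit_cost`) and the `next_metric` helper are hypothetical names chosen for illustration.

```python
# Ordered metric progressions, lowest maturity first.
# Stage text comes from the list above; the dictionary keys are
# hypothetical labels, not part of any established framework.
PROGRESSIONS = {
    "service_quality": (
        "production incidents",
        "customer impact",
        "accurate forecast of service quality",
    ),
    "defects": (
        "production incidents",
        "test defects",
        "forecasted test defects",
        "forecasted defects introduced to production",
    ),
    "offerings": (
        "unit counts of devices",
        "package offerings",
        "customer satisfaction",
    ),
    "unit_cost": (
        "unit counts or tasks",
        "unit cost and performance measures",
        "unit cost and performance trajectories",
    ),
}


def next_metric(track, current):
    """Return the stage that follows `current` on `track`.

    Returns None when the team is already at the final stage.
    Raises KeyError for an unknown track and ValueError for an
    unknown stage, so typos are not silently ignored.
    """
    stages = PROGRESSIONS[track]
    position = stages.index(current)
    if position + 1 < len(stages):
        return stages[position + 1]
    return None


if __name__ == "__main__":
    # A team measuring test defects should aim next at forecasting them.
    print(next_metric("defects", "test defects"))  # forecasted test defects
```

The point of returning only the single next stage is the same as the point of this post: the team takes one step at a time rather than jumping straight to the end of the progression.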

What has been your experience applying a metrics framework to a new organization? How have you adjusted it to ensure success with the new team?

Best, Jim Ditmore
