Well, the summer is over, even if we have had great weather into September. My apologies for the delay in a new post, and I know I have several topic requests to fulfill 🙂 Given our own journey at Danske Bank on availability, I thought it was best to revisit this topic and then come back around to other requests in my next posts. Enjoy, and I look forward to your comments!

It has been a tough few months for some US airlines with their IT systems availability. Hopefully, you were not caught up in the major delays and frustrations. Both Southwest and Delta suffered major outages in August and September. Add in the recent power outages in Newark that affected equipment and multiple airlines, and you have many customers fuming over delays and cancelled flights. And the cost to the airlines was huge: Delta’s outage alone is estimated at $100M to $150M, and that doesn’t include the reputation impact. Such outages are not limited to US airlines, with British Airways also suffering a major outage in September. Nor are Delta and Southwest unique in their problems: both United and American suffered major failures and widespread impacts in 2015. Even with large IT budgets, and hundreds of millions invested in upgrades over the past few years, airlines are struggling to maintain service in the digital age. The reasons are straightforward:

- At their core, services are based on antiquated systems that have been partially refitted and upgraded over decades (the core reservation system is from the 1960s)

- Airlines have struggled earlier this decade to make a profit due to oil prices, and minimally invested in the IT systems to attack the technical debt. This was further complicated by multiple integrations that had to be executed due to mergers.

- As they have digitalized their customer interfaces and flight checkout procedures, the previous manual procedures are now backup steps that are infrequently exercised and woefully undermanned when IT systems do fail, resulting in massive service outages.

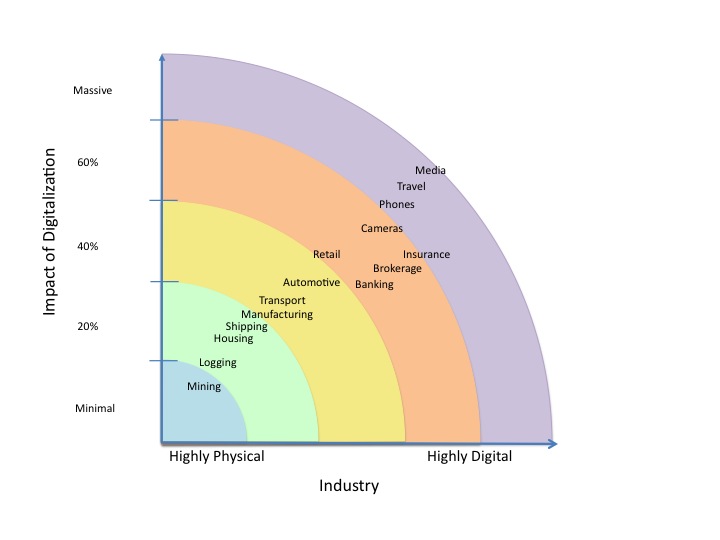

With digitalization reaching even further into the customer interfaces and operations, airlines, like many other industries, must invest in stabilizing their systems, address their technical debt, and get serious about availability. Some should start with the best practices in the previous post on Improving Availability, Where to Start. Others, like many IT shops, have decent availability but still have much to do to get to first quartile availability. If you have made good progress but realize that three 9’s, or preferably four 9’s, of availability on your key channels is critical for you to win in the digital age, this post covers what you should do.
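To make the "9’s" concrete, here is a minimal sketch that converts an availability percentage into a yearly downtime budget. It assumes a 365-day year and a 7×24 service window with no planned maintenance exclusions; the function name is only illustrative.

```python
# Assumption: a 365-day year and a 7x24 service window, with no exclusions
# for planned maintenance.
MINUTES_PER_YEAR = 365 * 24 * 60


def downtime_budget_minutes(availability_pct: float) -> float:
    """Minutes of downtime per year allowed at the given availability percentage."""
    return MINUTES_PER_YEAR * (1 - availability_pct / 100)


if __name__ == "__main__":
    for label, pct in [("two 9's", 99.0), ("three 9's", 99.9), ("four 9's", 99.99)]:
        minutes = downtime_budget_minutes(pct)
        print(f"{label} ({pct}%): {minutes:.1f} minutes/year ({minutes / 60:.2f} hours)")
```

Under these assumptions, three 9’s leaves roughly 8.76 hours of downtime a year on a channel, while four 9’s leaves under an hour. That is why a single multi-hour outage can consume the whole year’s budget.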

Let’s start with the foundation. If you can deliver consistently good availability, then your team should already understand:

- Availability is about quality. Poor availability is a quality issue. You must have a quality culture that emphasizes quality as a desired outcome and doing things right if you wish to achieve high availability.

- Most defects — which then cause outages — are injected by change. Thus, strong change management processes that identify and eliminate defects are critical to further reduce outages.

- Monitor and manage to minimize impact. A capable command center with proper monitoring feeds and strong incident management practices may not prevent the defect from occurring but it can greatly reduce the time to restore and the overall customer impact. This directly translates into higher availability.

- You must learn and improve from the issues. Your incident management process must be coupled with a disciplined root cause analysis that ensures teams identify and correct underlying causes that will avoid future issues. This continuous learning and improvement is key to reaching high performance.

With this base understanding, and presumably with only smoldering areas of problems left for your IT shop, there are excellent extensions that will enable your team to move to first quartile availability with moderate but persistent effort. For many enterprises, this is now a highly desirable business goal. Reliable systems translate to reliable customer interfaces, as customers now access the heart of most companies’ systems through internet and mobile applications, typically on a 7×24 basis. Your production performance becomes very evident, very fast to your customers. And if you are down, they cannot transact, you cannot service them, your company loses real revenue, and more importantly, damages its reputation, often badly. It is far better to address these problems and gain a key edge in the market by consistently meeting or exceeding customer availability expectations.

First, if you have moved up from regularly fighting fires, the fact that outages are no longer an everyday occurrence does not mean that IT leadership can stop emphasizing quality. Delivering high quality must be core to your culture and your engineering values. As IT leaders, you must continue to reiterate the importance of quality and demonstrate your commitment to these values by your actions. When there is enormous time pressure to deliver a release, but it is not ready, you delay it until the quality is appropriate. Or you release a lower quality pilot version, with properly set customer and business expectations, that is followed in a timely manner by a quality release. You ensure adequate investment in foundational quality by funding system upgrades and lifecycle efforts so technical debt does not increase. You reward teams for high quality engineering, and not for fire-fighting. You advocate inspections, or agile methods, that enable defects to be removed earlier in the lifecycle at lower cost. You invest in automated testing and verification that enables work to be assured of higher quality at much lower cost. You address redundancy and ensure resiliency in core infrastructure and systems. Single power cord servers still in your data center? Really?? Take care of these long-neglected issues. And if you are not sure, go look for these typical failure points (another being network connections that are a single point of failure, or SPOF). We used to call these ‘easter eggs’, as in the eggs that no one found in a preceding year’s easter egg hunt, so that you find the old, and quite rotten, easter egg on your watch. It’s no fun, but it is far better to find them before they cause an outage.

Remember that quality is not achieved by not making mistakes (a zero defect goal is not the target). Instead, quality is achieved by a continuous improvement approach where defects are analyzed and causes eliminated, and where your team learns and applies best practices. Your target goal should be 1st quartile quality for your industry, which will provide competitive advantage. When you update the goals, also revisit the rewards of your organization and ensure they are aligned with these quality goals.

Second, you should build on your robust change management process. To get to median capability, you should have already established clear change review teams and proper change windows, and moved to deliveries through releases. Now, use the data to identify which groups are late in their preparation for changes, and where change defects are clustered and why. These understandings can improve and streamline the change processes (yes, some of the late changes could be due to too many approvals being required, for example). Further clusters of issues may be due to specific steps being poorly performed or to inadequate tools. For example, verification is often done as a cursory task and thus seldom catches critical change defects. The result is that the defect is then only discovered in production, hours later, when your entire customer base is trying to use the system but cannot. Of course, it is likely such an outage was entirely avoidable with adequate verification, because you would have known at the time of the change that it had failed and could have taken action then to back out the change. The failed change data is your gold mine of information to understand which groups need to improve and where they should improve. Importantly, be transparent with the data: publish the results by team and by root cause clusters. Transparency improves accountability. As an IT leader, you must then make the necessary investments and align efforts to correct the identified deficiencies and avoid future outages.
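As a rough illustration of mining failed change data, the sketch below computes a failure rate per team and counts failures by root cause cluster. The record layout, team names, and root cause labels are all hypothetical; in practice the data would come from your own change management tool.

```python
from collections import Counter

# Hypothetical change records: (team, succeeded, root_cause or None).
CHANGES = [
    ("payments", True, None),
    ("payments", False, "inadequate verification"),
    ("payments", False, "late preparation"),
    ("network", True, None),
    ("network", True, None),
    ("network", False, "inadequate verification"),
    ("mobile", True, None),
    ("mobile", True, None),
]


def failure_rate_by_team(records):
    """Return {team: failed changes / total changes} for each team."""
    totals, failures = Counter(), Counter()
    for team, succeeded, _cause in records:
        totals[team] += 1
        if not succeeded:
            failures[team] += 1
    return {team: failures[team] / totals[team] for team in totals}


def failures_by_root_cause(records):
    """Count failed changes per root cause cluster."""
    return Counter(cause for _team, succeeded, cause in records if not succeeded)


if __name__ == "__main__":
    rates = failure_rate_by_team(CHANGES)
    # Publish worst-performing teams first.
    for team, rate in sorted(rates.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{team}: {rate:.0%} of changes failed")
    for cause, count in failures_by_root_cause(CHANGES).most_common():
        print(f"{cause}: {count} failed changes")
```

Using a rate rather than a raw count keeps the comparison fair between teams that make many changes and teams that make few.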

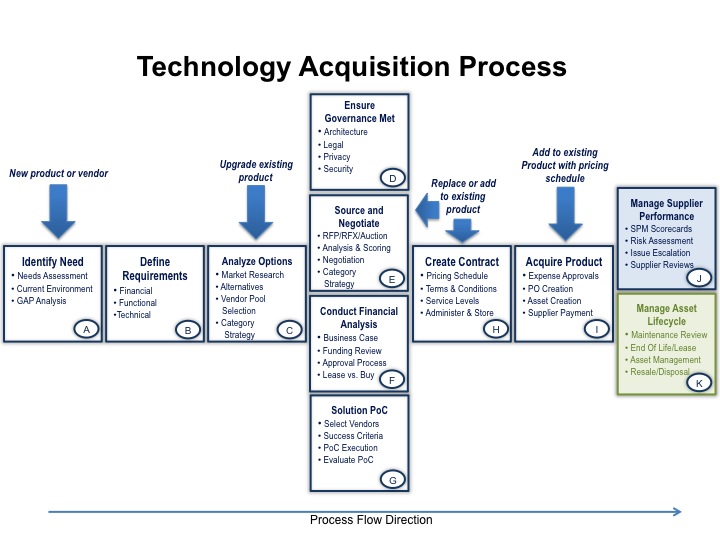

Further, you can extend the change process by introducing the concept of production ready. Production ready is when a system or major update can be introduced into production because it is ready on all the key performance aspects: security, recoverability, reliability, maintainability, usability, and operability. In our typical rush to deliver key features or products, the sustainability of the system is often neglected or omitted. By establishing the Operations team as the final approval gate for a major change to go into production, and leveraging the production ready criteria, organizations can ensure that these often-neglected areas are attended to and properly delivered as part of the normal development process. These steps then enable a much higher performing system in production and avoid customer impacts. For a detailed definition of the production ready process, please see the reference page.
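One simple way to picture the production ready gate is as a checklist over the six aspects named above, where a major change is approved only when every aspect has been signed off. This is only a sketch: the function name and the sign-off dictionary are hypothetical, and a real gate would carry evidence for each aspect rather than a simple yes or no.

```python
# The six aspects come from the production ready definition above.
CRITERIA = (
    "security",
    "recoverability",
    "reliability",
    "maintainability",
    "usability",
    "operability",
)


def production_ready(signoffs):
    """Return (approved, missing) given a {criterion: bool} sign-off mapping.

    Any criterion that is absent or not signed off blocks approval.
    """
    missing = [c for c in CRITERIA if not signoffs.get(c, False)]
    return (not missing, missing)


if __name__ == "__main__":
    # Hypothetical release where recoverability was never tested.
    release = {c: True for c in CRITERIA}
    release["recoverability"] = False
    approved, missing = production_ready(release)
    print("approved" if approved else f"blocked, missing: {', '.join(missing)}")
```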

Third, ensure you have consolidated your monitoring and that all significant customer impacting problems are routed through an enterprise command center via an effective incident management process. An Enterprise Command Center (ECC) is basically an enterprise version of a Network Operations Center or NOC, where all of your systems and infrastructure are monitored (not just networks). This modern ECC also has the capability to facilitate and coordinate triage and resolution efforts for production issues. An effective ECC can bring together the right resources from across the enterprise and supporting vendors to diagnose and fix production issues while providing communication and updates to the rest of the enterprise. Delivering highly available systems requires an investment in an ECC and the supporting diagnostic and monitoring systems. Many companies have partially constructed the diagnostics or have siloed war rooms for some applications or infrastructure components. To fully and properly handle production issues, these capabilities must be consolidated and integrated.

Once you have an integrated ECC, you can extend it by moving from component monitoring to full channel monitoring. Full channel monitoring is where the entire stack for a critical customer channel (e.g. online banking for financial services or customer shopping for a retailer) has been instrumented so that a comprehensive view can be continuously monitored within the ECC. The instrumentation is such that not only are all the infrastructure components fully monitored, but the databases, middleware, and software components are instrumented as well. Further, proxy transactions can be, and are, run on a periodic basis to measure performance and detect any issues. This level of instrumentation requires considerable investment, and thus is normally done only for the most critical channels. It also requires sophisticated toolsets such as AppDynamics. But full channel monitoring enables immediate detection of issues or service failures, and most importantly, enables very rapid correlation of where the fault lies. This rapid correlation can take incident impact from hours to minutes or even seconds. Automated recovery routines can be built to accelerate recovery from given scenarios and reduce impact to seconds. If your company’s revenue or service is highly dependent on such a channel, I would highly recommend the investment. A single severe outage that is avoided or greatly reduced can often pay for the entire instrumentation cost.
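To show what a proxy transaction amounts to, here is a minimal sketch of a probe that runs a scripted customer action, times it, and classifies the result. It is not how any particular monitoring product works; the function name, the 2-second "slow" threshold, and the fake login are all assumptions for illustration. A real probe would exercise the actual channel on a schedule and feed the ECC.

```python
import time
from typing import Callable


def run_proxy_transaction(
    transaction: Callable[[], None],
    slow_after_s: float = 2.0,          # assumed threshold, tune per channel
    clock: Callable[[], float] = time.monotonic,
) -> dict:
    """Run one scripted transaction and report ok / slow / failed with timing."""
    start = clock()
    try:
        transaction()
    except Exception as exc:
        # Any exception counts as a failed customer transaction.
        return {"status": "failed", "elapsed_s": clock() - start, "error": repr(exc)}
    elapsed = clock() - start
    status = "slow" if elapsed > slow_after_s else "ok"
    return {"status": status, "elapsed_s": elapsed, "error": None}


def fake_login() -> None:
    """Hypothetical stand-in for a scripted login against the channel."""
    time.sleep(0.01)


if __name__ == "__main__":
    print(run_proxy_transaction(fake_login))
```

The clock is passed in as a parameter so the probe can be tested without real delays. Treating "slow" as its own status matters because degraded response time is often the first visible sign of a fault.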

Fourth, you cannot be complacent about learning and improving. Whether from failed changes, incident pattern analysis, or industry trends and practices, you and your team should always be seeking to identify improvements. High performance, or here high quality, is never reached in one step, but instead in a series of many steps and adjustments. And given that our IT systems themselves are dynamic and changing over time, we must be alert to new trends and new issues, and adjust.

Often, where we execute strong root cause analysis and follow-up, we end up focused only on the individual issue or incident level. This can be all well and good for correcting the one issue, but if we miss broader patterns we can substantially undershoot optimal performance. As IT leaders, we must always consider the trees and the forest. It is important not just to fix the individual incident and get to root cause for that one incident, but also to look for the overall trends and patterns of your issues. Do they cluster with one application or infrastructure component? Does a supplier contribute far too many issues? Is inadequate testing a common thread among incidents? Do you have some teams that create far more defects than the norm? Are your designs too complex? Are you using the products in a mainstream or unique manner, especially if you are seeing many OS or product defects? Use these patterns and analysis to identify the systemic issues your organization must fix. They may be process issues (e.g., poor testing), application or infrastructure issues (e.g., obsolete hardware), or other issues (e.g., lack of documentation, incompetent staff). Discuss these issues and analysis with your management team and engineering leads. Tackle fixing them as a team, with your quality goals prioritizing the efforts. By correcting things both individually and systemically you can achieve far greater progress. Again, the transparency of the discussions will increase accountability and open up your teams so everyone can focus on the real goals as opposed to hiding problems.
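One common way to look at the forest rather than the trees is a Pareto view: rank incident sources by count and see how few of them account for most of the incidents. The sketch below is one possible approach, not a prescribed method; the 80% threshold and the component names are assumptions, and the same function works for suppliers, teams, or root cause categories.

```python
from collections import Counter


def pareto(categories, threshold=0.8):
    """Return the top categories that together reach `threshold` of all items.

    `categories` is one label per incident (e.g., the component at fault).
    The result is a list of (category, count), largest first.
    """
    counts = Counter(categories).most_common()
    total = sum(n for _, n in counts)
    selected, running = [], 0
    for category, n in counts:
        selected.append((category, n))
        running += n
        if running / total >= threshold:
            break
    return selected


if __name__ == "__main__":
    # Hypothetical incident log, one entry per incident.
    incidents = (
        ["core banking"] * 6 + ["network"] * 2 + ["supplier X"] + ["mobile app"]
    )
    for component, count in pareto(incidents):
        print(f"{component}: {count} incidents")
```

In this made-up log, two of the four sources account for 80% of the incidents, which is where the systemic fixes should be prioritized.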

These four extensions to your initial efforts will set your team on a course to achieve top quartile availability. Of course, you must couple these efforts with diligent engagement by senior management, adequate investment, and disciplined execution. Unfortunately, even with all the right measures, providing robust availability for your customers is rarely a straight-line improvement. It is a complex endeavor that requires persistence and adjustment along the way. But by implementing these steps, you can enable sustainable and substantial progress and achieve top quartile performance to provide business advantage in today’s 7×24 digital world.

If your shop is struggling with high availability or major outages, look to apply these practices (or send your CIO the link to this page 🙂 ).

Best, Jim Ditmore