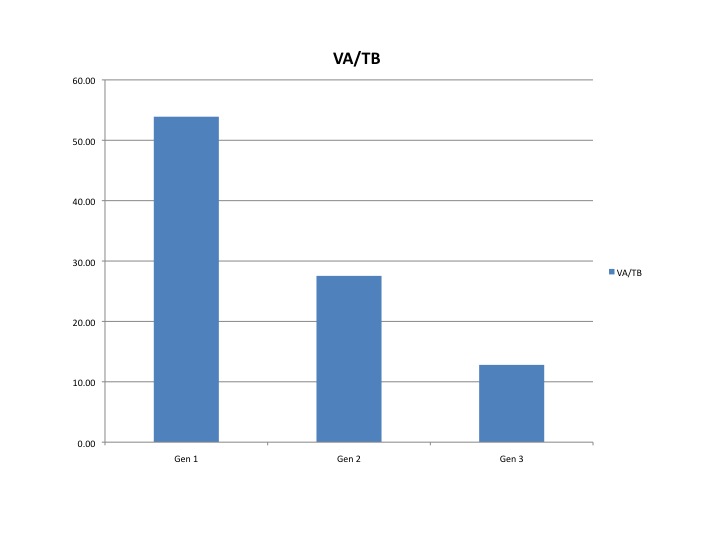

Recently I covered the ‘green shift’ of servers, where each new server generation not only drives major improvements in compute power but also requires about the same environmentals (power, cooling, space) as the previous generation, or even less. Thus, compute efficiency, or compute performance per watt, is improving exponentially. This trend in servers, which started in 2005 or so, is also being repeated in storage. We have seen a similar improvement in power per terabyte for the past 3 generations (since 2007). The current storage product pipeline suggests this efficiency trend will continue for the next several years. Below is a chart showing representative improvements in storage efficiency (power per terabyte) across storage product generations from a leading vendor.

With current technology advances, a terabyte of storage on today’s devices requires approximately one-fifth of the power of a device from 5 years ago. And these power requirements could drop even more precipitously with the advent of flash technology. By some estimates, there is a drop of 70% or more in power and space requirements with the switch to flash products. In addition to being far more power efficient, flash will offer huge performance advantages for applications, with corresponding reductions in the time needed to complete workloads. So expect flash storage to quickly convert the market once mainstream product introductions occur. IBM sees this as just around the corner, while other vendors see the flash conversion as 3 or more years out. In either scenario, there are continued major improvements in storage efficiency in the pipeline that deliver far lower power demands even with increasing storage requirements.
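
To put the one-fifth figure in annual terms, here is a rough sketch in Python. It assumes the improvement compounds smoothly year over year, which is a simplification of mine rather than something the vendor data states, and the function name is purely illustrative.

```python
def annual_power_factor(total_factor: float, years: float) -> float:
    """Per-year multiplier on power per terabyte that compounds to
    total_factor over the given number of years (assumes smooth compounding)."""
    return total_factor ** (1.0 / years)


# One-fifth of the power per terabyte after 5 years (figure from the text above).
factor = annual_power_factor(1 / 5, 5)

# Implied yearly reduction in power per terabyte.
annual_reduction = 1 - factor

# Capacity growth per year that would leave total storage power flat.
break_even_growth = 1 / factor - 1

print(f"Power per TB falls about {annual_reduction:.1%} per year")
print(f"Storage power stays flat at about {break_even_growth:.1%} capacity growth per year")
```

Under that assumption, power per terabyte falls by a bit over a quarter each year, so a firm could grow stored capacity by well over a third annually before its storage power draw starts to rise.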

Ultimately, with the combined efficiency improvements of both storage and server environments over the next 3 to 5 years, most firms will see a net reduction in data center requirements. Typical corporate data center power requirements are approximately one half server and one third storage, with the rest going to network and other devices. With the two biggest components experiencing ongoing dramatic power efficiency trends, the net power and space demand should decline in the coming years for all but the fastest growing firms. Add in the effects of virtualization, engineered stacks and SaaS, and the data centers in place today should suffice for most firms if they maintain a healthy replacement pace of older technology and embrace virtualization.
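
As a back-of-the-envelope check on this, the sketch below projects total data center power relative to today. Only the power split (one half server, one third storage, the rest other) comes from the paragraph above. The growth and efficiency rates are hypothetical placeholders you should replace with your own figures, and the class and function names are mine.

```python
from dataclasses import dataclass


@dataclass
class Component:
    share: float          # fraction of today's data center power
    demand_growth: float  # hypothetical annual growth in capacity needed
    power_factor: float   # hypothetical annual multiplier on power per unit of capacity


def relative_power(components: list[Component], years: int) -> float:
    """Total power after `years`, relative to today's total (1.0 = unchanged)."""
    return sum(
        c.share * ((1 + c.demand_growth) * c.power_factor) ** years
        for c in components
    )


# Shares are from the text; every rate below is an illustrative assumption.
mix = [
    Component(share=1 / 2, demand_growth=0.20, power_factor=0.75),   # servers
    Component(share=1 / 3, demand_growth=0.30, power_factor=0.725),  # storage
    Component(share=1 / 6, demand_growth=0.05, power_factor=1.0),    # network and other
]

print(f"Relative power in 5 years: {relative_power(mix, 5):.2f}")
```

With these illustrative inputs the total comes out at roughly three quarters of today's power draw, even though server demand grows 20% and storage demand 30% a year. Raise the growth rates enough and the result climbs back above 1.0, which is the "fastest growing firms" exception.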

Despite such improvements in efficiency, we still could see a major addition in total data center space because cloud and consumer firms like Facebook are investing major sums in new data centers. The resulting consumer data center boom also shows the effects of growing consumerization in the technology marketplace. Consumerization, which started with PCs and PC software and then moved to smart phones, has impacted the underlying technologies dramatically. The most advanced compute chips are now those developed for smart phones and video games. Storage technology demand and advances are driven heavily by smart phones and products like the MacBook Air, which already use only flash storage. The biggest and best data centers? They are no longer the domain of corporate demand; instead, consumer demand (e.g., Gmail, Facebook) drives bigger and more advanced centers. The proportion of data center space dedicated to direct consumer compute needs (a la Gmail or Facebook) versus enterprise compute needs (even for companies that provide consumer services directly) will see a major shift from enterprise to consumer over the next decade. This will follow the shifts in chips and storage, which at one time were driven by the enterprise space (and previously, the government) and are now driven by the consumer segment. And it is highly likely that there will be a surplus of enterprise class data centers (50K – 200K raised floor space) in the next 5 years. These centers are too small and inefficient to serve as consumer data centers (500K – 2M or larger), and with declining demand and consolidation effects, plenty of enterprise data center space will be on the market.

As an IT leader, you should ensure your firm is riding the effects of the compute and storage efficiency trends. Further multiply these demand reduction effects by leveraging virtualization, engineered stacks and SaaS (where appropriate). If you have a healthy buffer of data center space now, you could avoid major investments and costs in data centers in the next 5 to 10 years by taking these measures. Those monies can instead be spent on functional investments that drive more direct business value or drop to the bottom line of your firm. If you have excess data centers, I recommend consolidating quickly and disposing of the space as soon as possible. These assets will be worth far less in the coming years with the likely oversupply. Perhaps you can partner with a cloud firm looking for data center space if your asset is strategic enough for them. Conversely, if you have minimal buffer and see continued higher business growth, it may be possible to acquire good data center assets at a far lower unit cost than in the past.

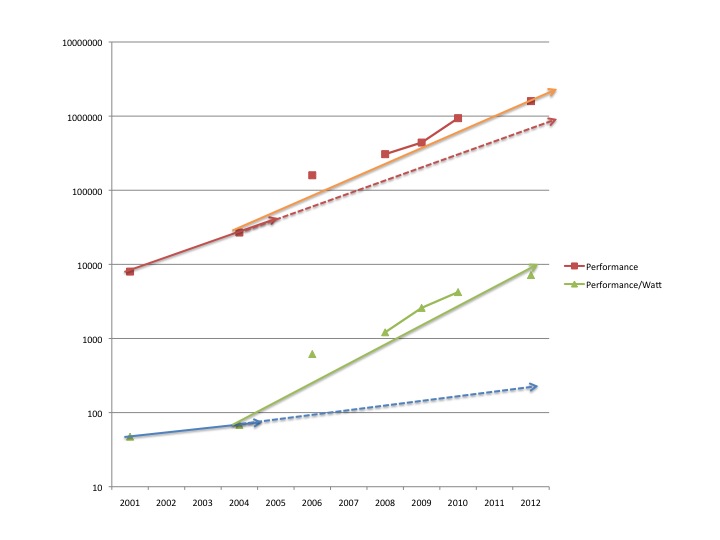

For 40 years, technology has ridden Moore’s Law to yield ever-more-powerful processors at lower cost. Its compounding effects have been astounding — and we are now seeing nearly 10 years of similar compounding on the power efficiency side of the equation (below is a chart for processor compute power advances and compute power efficiency advances).

The chart above shows how the compute efficiency (performance per watt — green line) has shifted dramatically from its historical trend (blue lines). And it’s improving about as fast as compute performance is improving (red lines), perhaps even faster.

These server and storage advances have resulted in fundamental changes in data centers and their demand trends for corporations. Top IT leaders will take advantage of these trends and be able to direct more IT investment into business functionality and less into the supporting base utility costs of the data center, while still growing compute and storage capacities to meet business needs.

What trends are you seeing in your data center environment? Can you turn the corner on data center demand? Are you able to meet your current and future business needs and growth within your current data center footprint and avoid adding data center capacity?

Best, Jim Ditmore